Beyond APIs: Software interfaces in the agent era

For decades, APIs have been the standard for connecting software systems. Whether REST, gRPC, or GraphQL, APIs follow the same principle: well-structured interfaces that are defined ahead of time to expose data and functionality to third parties. But as AI Agents start taking on more autonomous operations this rigid model is limiting what they can do.

APIs work well when requirements are known in advance, but agents often lack full context at the start. They explore, iterate and adapt based on their goals and real-time learning. Relying solely on predefined API calls can restrict an agent’s ability to interact dynamically with software.

Like many in our industry, we have been dealing a lot with the challenges of agent to software interfaces. We think the future of these interfaces will move beyond static APIs toward more flexible, expressive, and adaptive mechanisms. More on our thinking below, we’d love to hear your thoughts!

The limitations of APIs for agents

APIs are designed for predictable, developer-driven interactions. The developer writes a request, expects a response, and handles errors explicitly. While this works well for traditional software integrations, it introduces several friction points when applied to autonomous agents operating in dynamic environments.

1. Rigid interfaces don’t work well with dynamic reasoning

APIs define fixed contracts with specific endpoints, request formats, and outputs. But agents operate in a less deterministic way. Whilst an API structure may work well for one use case another may be completely impossible given the set of endpoints and data the API exposes. For example, an API might provide a fetch_customer_data(id) function, but an agent will likely not start with an ID, but perhaps a name or email. This forces agents to reason about chaining multiple API calls for tasks that could be a single step.

2. API interfaces need to be integrated ahead of time

To achieve good performance APIs often need to be wrapped in a tool that is passed to the agent. This means that work is needed ahead of time to integrate the API. Again this limits what the agent can achieve. It is unable to discover and integrate APIs it needs at runtime based on the task that it has been asked to achieve.

Even for coding agents the requirements for authentication and documentation require some work to integrate the API ahead of time. Not to mention that for a production system your agent needs to be able to handle a whole other set of software engineering concerns, for example pagination, caching and rate-limiting

3. Handling errors and change management

In traditional software, API failures are usually logged and errors are returned to the caller. But agents can dynamically react to errors and adjust their path toward the goal. This is a completely new paradigm and one of the advantages of agents, but existing APIs often don’t provide enough information or direction for the agent to plan how it will recover from an encountered error effectively.

Likewise, having done all the work to integrate an API, your agent is tied to the current implementation. Any versioning changes or worse breaking changes will mean your shiny agent is now functionally broken.

What would good look like?

If APIs aren’t enough, what does an agent-first interface look like? Instead of rigid, predefined endpoints, future agents to software interfaces should be adaptive, declarative, and goal-oriented. Rather than requiring agents to conform to static API contracts, the software itself should expose capabilities in a way that agents can reason about, compose, and execute dynamically.

1. Self describing and discoverable Interfaces

Agents should not need hardcoded API specifications or be coded ahead of time to connect to them. Instead, it should be possible for agents to describe available actions, parameters, and expected outputs on the third party software. This could include:

- Schema-based discovery - agents should be able to discover and call third party systems dynamically. This means they should be able to connect, list available functionality and integrate with them all at runtime.

- Execution hints - Interfaces should provide execution hints beyond basic documentation. For example, “This action requires a valid session token” or “This function is expensive, use sparingly”.

- Fine grained agent native authentication - Most APIs authentication and authorization controls are designed around human users who sign up ahead of time. Future software to agent interfaces should allow an agent to securely sign up and have sensible authorization controls for what they can do. We’ve explored how we think authentication might evolve here (↗).

2. Flexible, goal-oriented invocation

Today callers of an API need to work out which set of APIs to call to achieve their goal. But in the future agents should be able to express intent, and the system should handle how to achieve the goal. This would mean software that exposes declarative interfaces where the agent specifies what it wants to achieve, and the system determines how.

3. Robust error handling and introspection

Instead of returning opaque error codes, software should provide rich, structured feedback that agents can reason about. This could include execution hints that nudge agents towards other approaches (This API requires X, you can get it by calling API Y) or clear guidance if the error can’t be worked around. It would also likely involve providing additional context in the error response so in the case a human needs to be included in the resolution, simple steps can be provided to them.

4. Support for parallel execution

Agents benefit from running multiple actions in parallel but this isn’t a model many APIs support. Pagination for example is an area where the design of the API has a big impact on how easily the agent can fetch data in parallel. Page based APIs (GET /blogs?page=1) are easier to load in parallel then than token based ones (GET /blogs?token=1s2fsf) where the result of the current page is needed to load the next one.

The Path Forward

To move beyond APIs, we need to rethink how software communicates its capabilities to agents in a way that is flexible, interpretable, and adaptable. The goal isn’t to replace APIs outright but to evolve toward a model where agents don’t just consume endpoints—they understand and navigate software functionality intelligently.

Let’s explore some real-world approaches and frameworks pushing toward this future.

1. LLM assisted APIs aka Tools

A feature of some agentic frameworks (like Portia AI) is to wrap the APIs that are provided to the agent in a level of LLM smarts. This gives more flexibility in how the tool is called.

Examples:

- If the format of a timestamp in an input field has changed the LLM can try again with the correct format as long as the API returns a descriptive error field.

- If the name of a field in the response has changed the LLM can still use the response without needing a change to the tool definition.

@tool("get_posts_since", args_schema=GetPostsInput, return_direct=True)

def get_posts_since(timestamp: str) -> dict[str, Any]:

url = f"https://my_hosted_blog.com/posts?since={timestamp}"

try:

response = requests.get(url, timeout=5)

response.raise_for_status() # Raise an error for non-200 responses

return response.json()

except requests.RequestException as e:

return f"Error fetching posts: {str(e)}"

✅ Pros:

- The agent only has to reason about function signatures rather than full API calls.

- Allows some flexibility for the agent to try new approaches and recover from some errors.

- The agent can handle extraction of the relevant data from the response itself.

❌ Cons:

- Still requires explicit coding of the functions ahead of time.

- No built-in reasoning about dependencies or execution order which is usually required with REST APIs.

2. Generating Code Instead of Calling APIs

Instead of integrating directly with APIs, agents can generate and execute code using SDKs that wrap underlying functionality. This is common today for AI-assisted development, where models write API client code dynamically instead of calling endpoints directly.

Example:

An LLM generates a Python snippet using a cloud provider’s SDK instead of making raw API requests:

import boto3

s3 = boto3.client("s3")

s3.upload_file("report.pdf", "my-bucket", "report.pdf")

✅ Pros:

- Leverages existing SDK ecosystems (AWS SDK, Google Cloud SDK, etc.).

- Gives agents more control over execution (e.g., handling exceptions, retries).

- More resilient to API changes as the SDK abstracts away versioning differences.

❌ Cons:

- Requires agents to understand and generate valid code. Depending on the goal this might require accurately calling several functions within the SDK.

- Performance relies on well documented SDK which work exactly as described.

- Execution environments need to be properly sandboxed to prevent rogue code being executed. Either you have no sandboxing which is a security nightmare in production or you sandbox the code that is generated but this is an engineering challenge and limits functionality.

3. Computer and / or browser use

Some agents bypass APIs entirely, interacting with software through web browsers just like humans do.

Examples:

- Instead of integrating with a banking API, an agent navigates the bank’s website, logging in, clicking buttons, and extracting data from web pages.

- Browser agents go beyond basic automation tools like Selenium, Playwright, and Puppeteer by leveraging multi-modal discovery of web pages and reasoning to navigate them.

✅ Pros:

- Works even when APIs aren’t available.

- Mimics real human interactions, reducing integration friction.

❌ Cons:

- Can be more fragile as changes to UI or new pop ups can break automation and are far more common than API changes.

- The approach is orders of magnitude slower than API access and also far more expensive as more LLM usage is needed to guide the computer usage

- Can be a challenge to deal with authentication, a lot of energy has been expended in the last decade trying to prevent automation from interacting with websites (i.e. Captchas). We tinkered with browser agents a few months back. Check it out here if you're interested(↗) and keep an eye on a fresh look in the coming weeks!

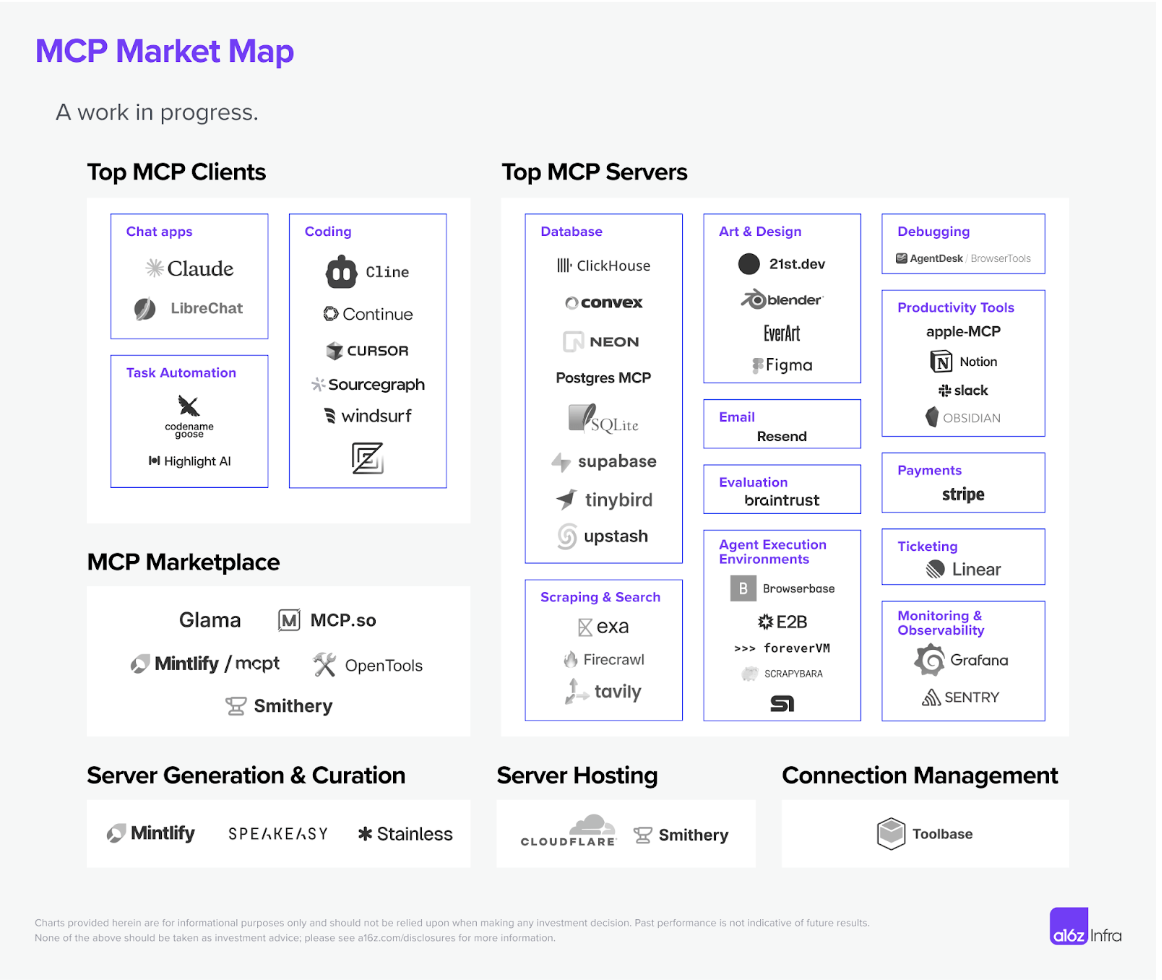

4. Dynamically discoverable tools

One of the most promising directions for agent-software interaction is Model Context Protocol (or MCP), which emphasizes dynamic tool discovery, self-registration, and agent-to-agent collaboration. Instead of hardcoded integrations, software components expose self-describing capabilities that agents can discover and use on demand. We’ve written about our experience using MCP here (↗).

Examples:

-

An agent contacts an MCP registry and lists a set of MCP servers relevant to its task. The agent can self-register with these servers using OAuth Dynamic Registration (↗).

-

Once registered the agent can list all the tools a server has and call them based on the metadata supplied by them.

✅ Pros:

- No integration required ahead of time. Thanks to the registry and dynamic registration new tools can be integrated on the fly.

- More composable and modular, tools can be combined in new ways based on the goal at hand.

- Maintenance of tools is handled by the third party who owns the MCP server.

❌ Cons:

- Whilst we believe MCP will be a big part of the puzzle in the future, current implementations are somewhat lacking. There are few registries and authentication has only been added to the standard(↗) in the last couple of days.

- Even with good implementations in the future, the quality of tools can vary largely and agents will need to be able to identify good servers/tools for good performance.

5. Agent <> Agent interfaces

Instead of imperative API calls (POST /create_user), in the future declarative workflows will let agents specify what they want to achieve, and the external system will determine how to execute it. We see some natural language APIs today but true flexibility will be achieved when we can have inter-system agent handoff.

Note we see this as different to intra-system agent handoffs; many multi-agent systems today allow you to have agents talking to agents, but we believe in the future the interface between systems will also be agent <> agent.

Example:

-

Instead of calling your billing software APIs manually, an agent would submit a high-level goal:

"Ensure customer X has an active subscription and has been notified of their renewal"

-

The system maps this to the correct sequence of operations, handling retries and dependencies automatically.

✅ Pros:

- Shifts complexity from the agent to the software, moving the need to understand the domain logic of the problem to the party with the most context. In the above example I don’t need to understand how a user entity relates to a subscription and the set of API calls to update both.

- Removes overhead of API management, versioning etc since execution logic is abstracted.

❌ Cons:

- Will require strong guardrails, authentication controls, and human in the loop controls etc.

- This is a new paradigm in software engineering and this may limit adoption.

Bringing it all together

At Portia, we’re excited about the future of agent-first software interfaces. The limitations of traditional APIs don’t mean the end of structured integrations, but rather the beginning of a new era—one where software exposes its capabilities in ways that agents can reason about, adapt to, and dynamically interact with.

From LLM-assisted smart tools to dynamically discoverable interfaces like MCP, the path forward is clear: agents need more flexible, self-describing, securely authenticated and goal-oriented mechanisms to interact with software.

We believe the best agent <> software interfaces are still ahead of us, and we’re excited to push the boundaries. If you’re thinking about these challenges too, we’d love to hear your thoughts!